Misinformation Detection Services

Co-inform analysis and rules

In general, the analysis models developed within Co-Inform require annotated data and rely on Machine Learning (ML) models. Such data is obtained from existing third-party data sources and from datasets shared with the Co-Inform consortium, such as the data collected through the so-called Data Collector (DC) module.

The input data is given either as URLs that need to be analyzed or as textual content, together with annotation labels where available (e.g. information/misinformation labels), which are used for training the MD services. This data is collected from different information sources such as social media websites (e.g. Twitter, Reddit) or news websites. In general, this information is useful both for detecting claims on social platforms (new claims as well as re-shares of known ones) and for analyzing their spread and evolution.

Besides a particular content or claim, the following external information is used as input:

- Historical data about claims. Fact-checking datasets are used both for spotting and for predictive models. This type of data needs to contain information about particular claims (e.g. who made the claim, what was claimed, and where and when it was made), as well as some information from the fact-checking process of known fact-checkers, e.g. a label indicating the type of claim (factual, fake, satire, …) and the author of the review;

- Information network and/or social network. Another important type of input that is used is the network associated with the author of a claim or the claim information network (i.e., the interconnection between different claims). This data will be particularly relevant for bot discovery and the Misinformation Flow Analysis and Prediction (MFAP) module.

In general, the models return the probability that a given piece of information (or a URL or an account) is related to misinformative or informative content (or to another relevant label).

Co-inform Credibility Model

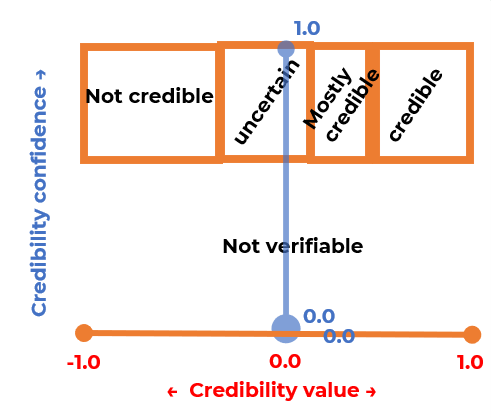

If you’ve used the Co-inform tools you will have encountered labels like “not credible”, “credibility uncertain”, “not verifiable” or “credible” and visualizations like:

Similarly, you may have encountered explanations like:

Credible (with 1.00 of credibility value, and 91% of confidence)

In this section, we will explain what these credibility values, confidence and labels mean.

Before we do so, it is important to note that we always speak about credibility, but avoid mentioning accuracy. The distinction is subtle, but important. Accuracy of a claim refers to whether the claim is true or not; this can only be ascertained by human fact-checkers who have direct access to sufficient evidence. The credibility of a claim refers to whether there are sufficient signals available that suggest the claim may be accurate. Credibility is therefore weaker (and thus easier to compute, although certainly not easy!) than accuracy. In Co-inform we believe that automated systems cannot determine accuracy, but they can sure try to determine credibility. This is why we use credibility.

Having established that we are trying to predict credibility, we next need a way to model credibility. Most fact-checkers use their own set of labels to summarise their fact-checks. For example, the Washington Post uses labels such as “One Pinocchio” or “Four Pinocchios”. FactCheckNI on the other hand uses labels like “accurate with considerations” or “inaccurate”. You can see that with dozens of reputable fact-checkers, each using their own set of labels (and defining them slightly differently), it’s not easy to understand what each label means. This is already hard for humans; for machines, it is even harder. Machines need either a small set of well-defined labels or, preferably, a range of numeric values. Since we wanted to explore how AIs can help in identifying misinformation, in Co-inform we chose to model credibility as a value in the range between -1.0 and 1.0. This is our credibility value.

The credibility value tells us how credible the tweet or claim is (based on the analysis our AI made). Essentially a value of -1.0 corresponds to something that is as “not credible” as possible and a value of +1.0 is something that is as “credible” as possible. Credibility is not binary, though, which is why we have a whole range of intermediate values. For example “accurate with considerations” as used by FactCheckNI means a claim is accurate, but only under certain conditions/assumptions. In terms of credibility, this means we can no longer assign it a value of 1.0, but we have to lower the value to, for example, 0.8. Similarly, some fact-checkers use labels “half-true” or “mixture” to rate claims which are neither accurate nor inaccurate. We can model this as a credibility value close to 0.0. We can model a “half-true” claim that tends to be less credible as, e.g. -0.1. You get the idea.

Great! So by modelling credibility as a range between -1.0 and 1.0 we can model all fact-checker labels! … well, not so fast. Some of the labels cannot be captured accurately by only modelling credibility. For example, FactCheckNI uses the label “unsubstantiated”, Snopes has “Unproven” and the Washington Post uses the label “Verdict Pending”; these all mean that the fact-checker could not find sufficient evidence to determine the accuracy (or credibility) of a claim. This is where the credibility confidence comes into play. We can estimate how strong the credibility signals are that support or contradict a claim (as well as how likely it is that our AIs are incorrect), and we express this as a number between 0.0 and 1.0. Here, 0.0 means that no credibility signals were found and 1.0 means that several credibility signals were found and the AI has, in the past, always predicted the credibility correctly given this amount of signals. In general, it is rare for AI systems to have 1.0 confidence as there is always some room for error, but the confidence can be quite high (e.g. over 0.9).

By combining the two numeric values (credibility value and confidence), we are able to model credibility in a very rich way that captures most of the ratings that fact-checkers use. (Most? Yes, in some rare cases, fact-checkers take into account other considerations like whether a claim is harmful. In Co-inform we decided not to introduce a third value to try to capture this as this would have added a lot of complexity for an edge case.) In the next figure you can see how the Co-inform credibility labels map to the credibility value and confidence. In summary: if our AI has sufficient confidence, we return a credibility label, otherwise we just return “not verifiable”.
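
As a minimal sketch of this idea, the snippet below turns a (credibility value, confidence) pair into one of the four Co-inform labels. The numeric thresholds and the names `Credibility` and `credibility_label` are assumptions made only for illustration; the text above does not state the cut-off values actually used.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MIN_CONFIDENCE = 0.5       # below this we do not commit to a credibility label
CREDIBLE_ABOVE = 0.25      # credibility values at or above this count as "credible"
NOT_CREDIBLE_BELOW = -0.25  # values at or below this count as "not credible"


@dataclass(frozen=True)
class Credibility:
    value: float       # -1.0 (not credible) .. 1.0 (credible)
    confidence: float  # 0.0 (no signals) .. 1.0 (strong, reliable signals)


def credibility_label(c: Credibility) -> str:
    """Map a credibility value and confidence to a human-readable label."""
    if c.confidence < MIN_CONFIDENCE:
        # Not enough evidence either way.
        return "not verifiable"
    if c.value >= CREDIBLE_ABOVE:
        return "credible"
    if c.value <= NOT_CREDIBLE_BELOW:
        return "not credible"
    # Confident, but the value sits near 0.0 (e.g. "half-true", "mixture").
    return "credibility uncertain"


print(credibility_label(Credibility(value=1.0, confidence=0.91)))
```

With these assumed thresholds, the explanation quoted earlier (credibility value 1.00, confidence 91%) falls in the "credible" band, while a confident "half-true" rating around -0.1 would be labelled "credibility uncertain".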

Modules and Rule Engine

Co-Inform policies have been defined based on Co-Inform stakeholder requirements, and aim to facilitate the flexible orchestration of rules that enable feedback responses between the user and the tools (Misinformation Detection modules and Co-inform plugin) which have been developed within the project. To achieve this, the JEasyRules rules engine (RE) API has been integrated into the plugin gateway to manage responses from the Misinformation Detection (MD) components and to provide an appropriate response or action to the browser plugin (BP) in real time.

Currently, three different services or components have been developed as part of the MD module: 1) MisinfoMe, a service for identifying misinformation based on information sources and historical annotated data; 2) Claim Credibility, a claim detection service that extracts important text snippets from documents; and 3) Stance Detection, a service that assigns a veracity score to a tweet by analyzing its textual features and the stances towards it.

For example, we determine a claim as credible if:

- It contains a link and the source of the link is reputable

- Or the publisher of the tweet is reputable

- Or there is no similarity to related false claims in the database

- We also check if the style of the tweet is similar to credible tweets

- And there is no/little disagreement towards the author of the tweet

Co-inform rules map these conditions to credibility values and confidences for each Misinformation Detection component:

- Reputation is determined by MisinfoMe.

- Similarity is determined by the claim similarity module.

- Style of post and agreement towards the post are determined by the content stance module.

The rule engine then compares the credibility and confidence values against thresholds and activates the aggregation method that assigns the final credibility of the post. The rule engine is triggered by the Co-inform servers whenever a request is received.
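
The thresholding and aggregation step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the project's rule set: the per-module thresholds, the module keys and the choice of "keep the least credible trusted response" as aggregation method are all hypothetical.

```python
from typing import Dict, Tuple

Score = Tuple[float, float]  # (credibility value, confidence)

# Hypothetical per-module confidence thresholds (not taken from the project).
THRESHOLDS: Dict[str, float] = {
    "misinfome": 0.5,
    "claim_similarity": 0.6,
    "content_stance": 0.7,
}


def aggregate(responses: Dict[str, Score]) -> Score:
    """Drop responses below their module's threshold, then keep the least credible one."""
    trusted = [
        score
        for module, score in responses.items()
        # Unknown modules are only trusted at full confidence.
        if score[1] >= THRESHOLDS.get(module, 1.0)
    ]
    if not trusted:
        # No module was confident enough: neutral value, zero confidence.
        return (0.0, 0.0)
    return min(trusted, key=lambda s: s[0])


final = aggregate({
    "misinfome": (0.9, 0.8),          # reputable source
    "claim_similarity": (-0.7, 0.65),  # resembles a known false claim
    "content_stance": (-1.0, 0.3),     # too uncertain, ignored
})
print(final)
```

Taking the minimum is a deliberately cautious choice: a reputable source does not outweigh a confident match with a known false claim. Other aggregation methods, such as a confidence-weighted average, would fit the same structure.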

MisinfoMe module

The MisinfoMe module provides an analysis which is based on a historical record of fact-checks and public assessments of online media sources. A tweet is assessed by looking at the relationship it has with known misinformation and low-credibility sources. For this reason, this module is targeted towards tweets which share a link.

It is based on two types of ratings:

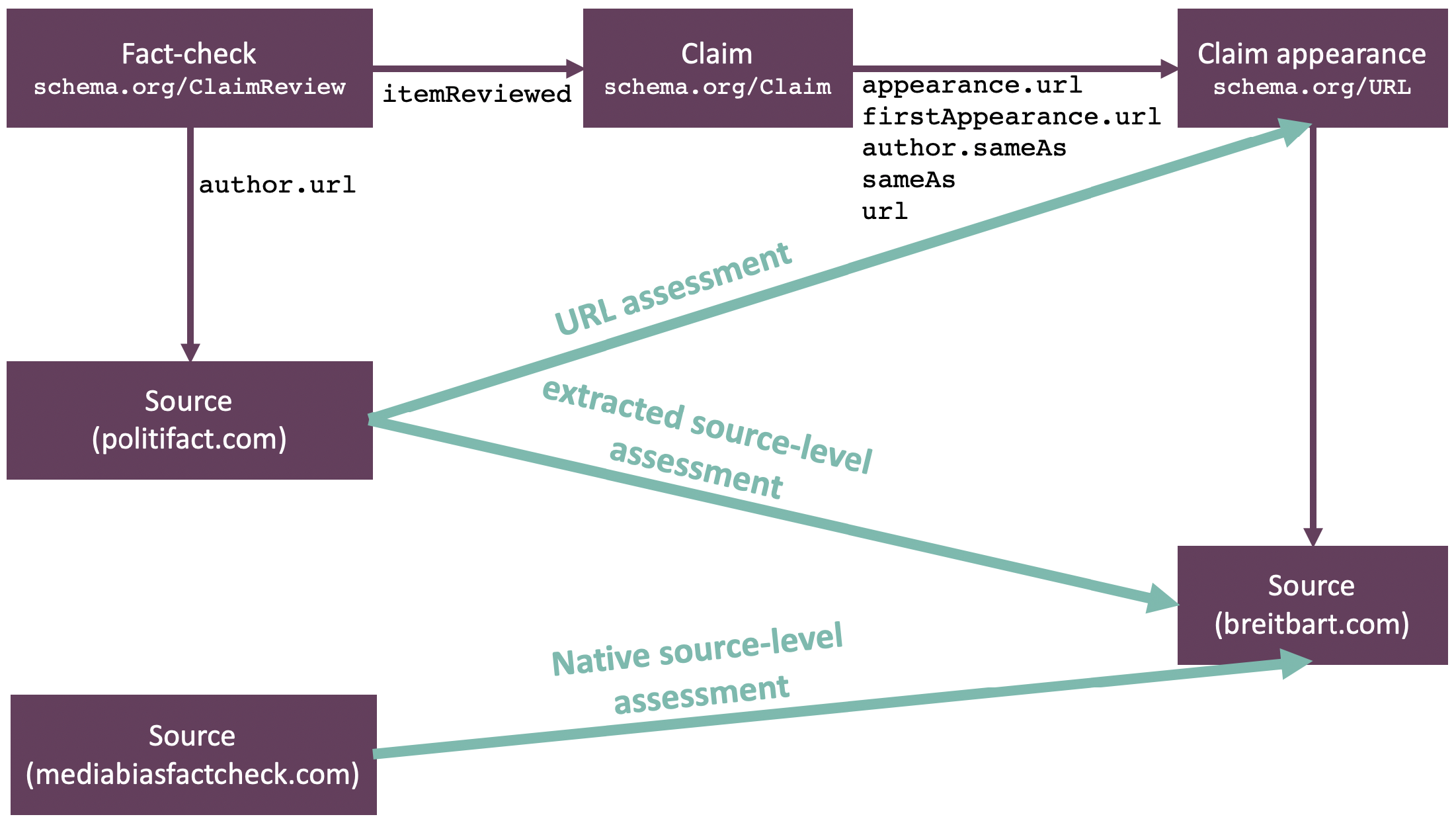

- Fact-checks: verified signatories of the IFCN code of principles create and share ClaimReview assessments, which are collected by us and processed to understand the outcome of the fact-check (e.g., correct/false) and the appearances of the claim. These appearances, annotated by the fact-checkers, are stored together with the label and a corrective link (pointing to the article of the fact-check). In this way we can provide specific corrections when a link is looked up for matches, and we can also aggregate the fact-checks at the website level to provide source-level statistics (e.g. naturalnews.com contains several stories which have been fact-checked as “not credible”, therefore it becomes a low-credibility source).

- External source-level assessments: this data is aggregated from several existing tools (e.g. Media Bias/Fact Check, FakeNewsCodex, ECDC list of national authorities and public health agencies) which provide ratings of websites with high and low credibility. These ratings are merged together using a mapping methodology which unifies different scales of ratings into a pair of values (credibility and confidence).

By putting together the two types of ratings, the MisinfoMe module can compute the credibility of a specific link that is shared inside a tweet. When a tweet is assessed for its credibility, the two types of ratings are used together but kept clearly distinct, because the relationship with low-credibility information can take two different forms:

- A tweet shares a specific story that has already been fact-checked. In this case the relevant fact-check is picked up and the module replies with high confidence. The specific story has been reviewed by a human fact-checker, so there is no room for doubt.

- A tweet shares a link that comes from a low-credibility source. In this case, we do not have enough information about the specific story shared in the link, so the module can only reply with a low confidence value. The credibility value can be high or low depending on the reputation of the website, and this information is provided as a warning in the explanation of the module.
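
The two cases can be illustrated with a small lookup sketch. The URLs, domains, scores and the function name `assess_link` are hypothetical placeholders; the real module works on collected ClaimReview data and merged source ratings rather than hard-coded dictionaries.

```python
from typing import Dict, Tuple
from urllib.parse import urlparse

Score = Tuple[float, float]  # (credibility value, confidence)

# Hypothetical example data.
FACT_CHECKED_URLS: Dict[str, Score] = {
    "https://example.org/miracle-cure": (-1.0, 1.0),  # story reviewed by a fact-checker
}
SOURCE_RATINGS: Dict[str, Score] = {
    "example.org": (-0.6, 0.4),  # low-credibility source, low confidence
    "example.com": (0.8, 0.4),   # reputable source, still low confidence
}


def assess_link(url: str) -> Tuple[Score, str]:
    """Prefer a story-level fact-check; fall back to the source-level rating."""
    if url in FACT_CHECKED_URLS:
        return FACT_CHECKED_URLS[url], "fact-checked story"
    domain = urlparse(url).netloc.lower()
    if domain in SOURCE_RATINGS:
        # We know the website, but nothing about this specific story.
        return SOURCE_RATINGS[domain], "source-level rating only"
    return (0.0, 0.0), "no rating found"


print(assess_link("https://example.org/miracle-cure"))
print(assess_link("https://example.org/another-story"))
```

The second element of the result plays the role of the warning in the module's explanation: it tells the caller whether the score refers to the specific story or only to the website.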

Claim Similarity module

The claim similarity service follows a “divide and conquer” strategy and is designed for:

- identifying the main parts of the input document (e.g. a tweet or article). Parts are mainly factual claims, but we also consider URLs to other articles and metadata such as the website where the document was published.

- assessing the credibility of individual parts: factual claims are compared to previously factchecked claims (this is the most valuable credibility signal, hence the name for this module), linked documents are again divided in parts, and website credibilities are looked up using MisinfoMe (see above)

- combining the estimated credibilities for the parts into an overall credibility for the input document

Although these 3 steps sound simple, they require quite a few submodules:

- Checkworthiness model: Factual claims are actually not that easy to identify: although it’s easy to split a document into sentences, we then have to determine which sentences are making factual claims that are worthy of being verified. We do this to avoid checking the credibility of sentences which are just opinions (as you can imagine, a large proportion of sentences on Twitter are not factual claims). We use a machine learning model which has been trained on a dataset of 7482 sentences, of which about 5 thousand had been labeled as checkworthy and about 2 thousand as non-checkworthy. The dataset combined sentences from three distinct datasets: CBD (mostly political debates and news), Clef’20T1 (tweets) and Poynter data (covid-19 claims). We tested our model on around 7 thousand test sentences (mostly from political debates, but also claims about Covid-19) and it obtained close to 90% accuracy.

- Semantic similarity model: Once we have identified factual claims (with high confidence), we need to find whether there are existing claims that have been factchecked. For this we use a database of close to 100 thousand claims that have been factchecked over the last decade. Reputable fact-checkers publish these in a format that makes it easier for search engines to index them and hence for anyone to find them. However, we don’t want to just find these using keywords, because specific words can change (e.g. we want to match a claim whether it uses the word “vaccines”, “jabs” or “inoculation”). Also, keywords don’t provide sufficient context about what is being claimed (there are hundreds of claims about vaccines). Therefore, we use a model to rate the semantic similarity between two sentences as a number between 0 and 5: a score of 0 means that the two sentences are completely unrelated; a score of 3 means that the sentences talk about related topics, but ultimately say different things; a score of 4 means that the sentences are saying similar things, but some details may differ. Finally, a score of 5 means that, although the words may differ, the sentences are conveying exactly the same message. We trained a deep learning model on around 50 thousand pairs of sentences and our model’s predictions coincided with human ratings in about 83% of the cases.

- Stance detection model: Semantic similarity tells us that two sentences may be conveying messages which are very similar. However, in order to correctly propagate credibility scores, we have to know whether the messages agree or disagree with each other. This is exactly what a stance detection model does: it tries to predict the label that best describes the relation between two sentences: agree, disagree, discuss or unrelated. Discuss and unrelated correspond to a relatively low semantic similarity score. If sentences S1 and S2 disagree and S2 has been found to be “not credible” by a factchecker, then we should conclude that S1 is credible. We trained a deep learning model to perform stance detection based on a dataset of 75 thousand pairs of sentences. Our model obtained 92% accuracy, although subsequent testing showed that the accuracy is quite a bit lower on actual tweets and news (as low as 60%). In machine learning, we say that the model does not “generalise” well to new cases. The main reason for this is that the 75 thousand pairs of sentences used during training only reflect a narrow set of all the claims we encounter in the real world (for example, the model had not seen many claims about coronavirus).

- Normalising factchecker labels: Each factchecker uses its own set of labels for describing the accuracy of the reviewed claims. Unfortunately, this means there are hundreds of labels (in different languages!) in use by dozens of reputable factcheckers (and this list is continuously growing). Therefore we need to map these existing labels to our conceptual model of credibility. We developed a long list of heuristics (rules like if the label is one of ‘false’, ‘inaccurate’, ‘falso’, ‘faux’, ‘keliru’, then the credibility value should be -1.0 with confidence 1.0).

- Automatic Machine Translation: Reviewed claims can be in different languages, but also the input document can be in different languages. To be able to support as many languages as possible, we use machine translation (i.e. a system similar to Google Translate) so that we always deal with sentences and documents in English. Depending on the language, this can negatively affect the accuracy if the translation is not faithful to the original meaning.

- Aggregation of credibility scores: At various steps in our program, we need to combine credibility and confidence scores as we move from parts to the whole. We experimented with various rules on how best to do this: from keeping the least credible part (an informing claim does not make up for a misinforming claim) to taking into account the confidence (we just ignore any credibility score if the confidence is too low). This is a balancing act: we can detect more misinformation if we allow a lower confidence, but we also introduce more errors by doing so.
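
To make the last three submodules more concrete, here is a rough sketch of how label normalisation, stance-based propagation and aggregation could fit together. Only the rule for ‘false’-type labels comes from the text above; the remaining label scores, the scaling of confidence by the similarity score, the confidence cut-off and all function names are assumptions for illustration.

```python
from typing import Dict, List, Tuple

Score = Tuple[float, float]  # (credibility value, confidence)

LABEL_SCORES: Dict[str, Score] = {
    # Rule quoted in the text: these labels map to -1.0 with confidence 1.0.
    "false": (-1.0, 1.0), "inaccurate": (-1.0, 1.0), "falso": (-1.0, 1.0),
    "faux": (-1.0, 1.0), "keliru": (-1.0, 1.0),
    # Assumed entries, for illustration only.
    "true": (1.0, 1.0), "half-true": (0.0, 1.0), "unproven": (0.0, 0.0),
}


def normalise_label(label: str) -> Score:
    """Map a fact-checker label to (credibility, confidence); unknown labels get no confidence."""
    return LABEL_SCORES.get(label.strip().lower(), (0.0, 0.0))


def propagate(reviewed: Score, similarity: float, stance: str) -> Score:
    """Transfer the score of a fact-checked claim to a new sentence."""
    value, confidence = reviewed
    if stance == "agree":
        sign = 1.0
    elif stance == "disagree":
        sign = -1.0  # disagreeing with a "not credible" claim suggests credibility
    else:
        return (0.0, 0.0)  # "discuss" or "unrelated": nothing to propagate
    # Assumption: scale confidence by the 0..5 similarity score.
    clamped = max(0.0, min(similarity, 5.0))
    return (sign * value, confidence * clamped / 5.0)


def aggregate_parts(parts: List[Score], min_confidence: float = 0.5) -> Score:
    """Ignore low-confidence parts, then keep the least credible remaining part."""
    trusted = [p for p in parts if p[1] >= min_confidence]
    if not trusted:
        return (0.0, 0.0)
    return min(trusted, key=lambda p: p[0])


reviewed = normalise_label("Falso")
parts = [
    propagate(reviewed, similarity=5.0, stance="disagree"),
    propagate(reviewed, similarity=4.0, stance="agree"),
]
print(aggregate_parts(parts))
```

Raising `min_confidence` makes the system more conservative (fewer detections, fewer errors), which is the balancing act described in the aggregation item.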

Content Stance module

Finally, a third service is designed for content analysis; it assigns the credibility of a tweet by analyzing the source tweet and its replies. The service has been developed using a two-phase pipeline based on ML models, and the pipeline is trained with the RumourEval2019, Twitter15/16 and Co-inform datasets. The pipeline assumes that the source tweet contains a rumor. It first detects the stance of each reply towards the rumor as supporting, denying, questioning or commenting. Then, a second ML-based model evaluates the stances along with textual features and predicts the probability of each veracity class: true, false and unverified. Finally, the credibility of the tweet is assigned by aggregating the predicted probabilities of the veracity classes.
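
The text does not spell out how the veracity probabilities are aggregated. One simple possibility, shown here purely as an assumption, is to use the difference between the "true" and "false" probabilities as the credibility value and to lower the confidence as the "unverified" probability grows. The function name `veracity_to_credibility` is hypothetical.

```python
from typing import Dict, Tuple


def veracity_to_credibility(probs: Dict[str, float]) -> Tuple[float, float]:
    """Turn veracity probabilities into a (credibility value, confidence) pair.

    Assumed scheme: value = P(true) - P(false), confidence = 1 - P(unverified).
    """
    total = probs["true"] + probs["false"] + probs["unverified"]
    if total <= 0:
        raise ValueError("probabilities must sum to a positive number")
    # Normalise in case the model output does not sum exactly to 1.
    p_true = probs["true"] / total
    p_false = probs["false"] / total
    p_unverified = probs["unverified"] / total
    value = p_true - p_false           # lies in [-1.0, 1.0]
    confidence = 1.0 - p_unverified    # lies in [0.0, 1.0]
    return value, confidence


print(veracity_to_credibility({"true": 0.5, "false": 0.25, "unverified": 0.25}))
```

Under this scheme a tweet predicted as mostly "unverified" ends up with low confidence, which matches the "not verifiable" outcome of the credibility model described earlier.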

Co-inform project is co-funded by Horizon 2020 – the Framework Programme for Research and Innovation (2014-2020)

H2020-SC6-CO-CREATION-2016-2017 (CO-CREATION FOR GROWTH AND INCLUSION)

Type of action: RIA (Research and Innovation action)

Proposal number: 770302