
Co-inform’s innovative technical solutions to tackle misinformation on social media

Author: Ronald Denaux, Senior Researcher at Expert System Iberia

We can summarise Co-inform’s core technical solution as a “decentralised, transparent and community-driven misinformation linking system”. Let’s break down this mouthful into bite-size chunks.

Misinformation linking is similar to misinformation detection. However, the latter is intended to help professional users (journalists or fact-checkers) find potential disinformation. Misinformation linking, on the other hand, is designed to be useful to the general public; to achieve this, we impose additional transparency and explainability conditions. Like misinformation detection, linking is based on automated algorithms that aim to predict whether a given piece of online content is misinformative. For this purpose, the Co-inform team has built modules based on:

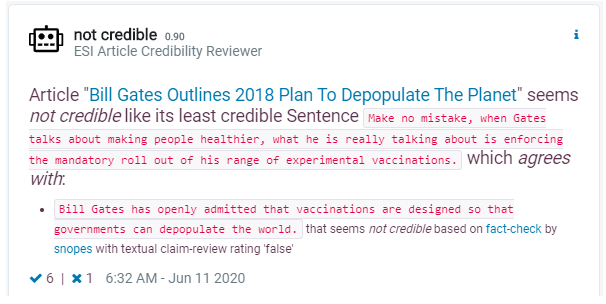

- finding related credibility signals that are already available online, such as (i) claim reviews published by reputable fact-checkers (e.g. PolitiFact, Snopes, Newtral) and (ii) reputation ratings for websites by organisations such as NewsGuard;

- estimating the reputation of social media accounts based on their previous posts;

- estimating the accuracy of content based on the reactions to it on social media.

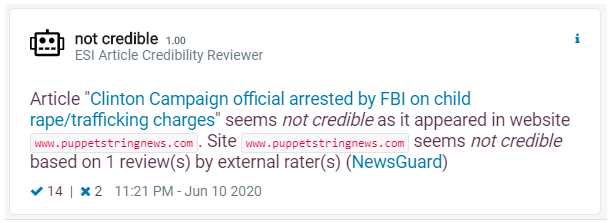

While existing misinformation detection tools use similar algorithms, in Co-inform we aim to make the results transparent. It is not sufficient to have a black-box system predicting whether online content is misinforming; the system must also provide a link (or path) between the reviewed content and the online credibility signals that were used as evidence. This (i) allows users (professional or not) to verify the automated rating and (ii) nudges users to consider credibility signals they might otherwise have missed. As a result, the Co-inform services are designed to amplify the work done by fact-checkers and reputation aggregators, rather than to act as a misinformation detection tool per se. The figures in this post show examples of the explanations our systems generate.

The second innovation of our solution is that it is designed to be decentralised. At its core, we have defined an extension to Schema.org (in general) and ClaimReview (in particular) that allows anyone (including automated systems) to assess the credibility of any content and share that assessment online; the key is that any such assessment must be backed by some evidence (which is also crucial for transparency). We believe such standardised formats for describing credibility reviews at web scale are crucial to enable collaboration between fact-checkers, social media platforms and the general public without relying on a single organisation. This matters not only for trust but also for scalability: global giants often rely on manual processes to tackle misinformation, which limits scalability and slows their reaction to fake news. Journalists and policymakers, who need to make fast and informed decisions, would benefit greatly from such technology in their everyday work.
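To make the idea of an evidence-backed, shareable assessment more concrete, here is a minimal sketch in Python of what such a record might look like. It is an illustration under assumptions, not the actual Co-inform schema: `itemReviewed`, `reviewRating`, `ratingValue`, `author`, `isBasedOn` and the `ClaimReview` type are existing Schema.org terms, while the `CredibilityReview` type name, the `confidence` field, the rating scale from -1 to 1, the helper `make_credibility_review` and all URLs are hypothetical.

```python
import json


def make_credibility_review(item_url, rating_value, confidence, author_name, evidence):
    """Build a JSON-LD-style credibility review as a plain dict.

    Hypothetical helper for illustration; it rejects reviews without evidence,
    mirroring the rule that every assessment must be backed by some evidence.
    """
    if not evidence:
        raise ValueError("a credibility review must be backed by at least one piece of evidence")
    return {
        "@context": "https://schema.org",
        "@type": "CredibilityReview",  # assumed name for the extension type
        "itemReviewed": {"@type": "SocialMediaPosting", "url": item_url},
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating_value,  # assumed scale: -1 (not credible) to 1 (credible)
            "confidence": confidence,     # assumed extension field
        },
        "author": {"@type": "Organization", "name": author_name},
        "isBasedOn": evidence,  # the link from the rating to its evidence
    }


# A fact-checker's claim review used as evidence (placeholder values).
fact_check = {
    "@type": "ClaimReview",
    "url": "https://factchecker.example/reviews/123",
    "claimReviewed": "Example claim text",
    "reviewRating": {"@type": "Rating", "alternateName": "False"},
}

review = make_credibility_review(
    item_url="https://social.example/post/1",
    rating_value=-0.8,
    confidence=0.7,
    author_name="Example automated reviewer",
    evidence=[fact_check],
)
print(json.dumps(review, indent=2))
```

Because the evidence travels with the rating, a reader (or another system) can follow `isBasedOn` back to the original fact-check instead of having to trust the number on its own.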

Finally, we have designed the Co-inform technical solutions to be community-driven. The community of users can influence and manage how all these credibility assessments are aggregated, via user feedback (on the accuracy of credibility assessments) and policy rules (e.g. about whether a fact-checker, author, or automated algorithm should be trusted). For instance, the first example above shows that 14 users agreed with the system’s rating while 2 disagreed.
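One way to picture how user feedback and policy rules could jointly shape an aggregated rating is the sketch below. It is only an illustration under stated assumptions, not the aggregation Co-inform actually uses: the `Assessment` class, the `trust_policy` mapping, the smoothing formula and the weighted average are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    source: str         # who produced the assessment (fact-checker, algorithm, ...)
    credibility: float  # assumed scale: -1.0 (not credible) to 1.0 (credible)
    agree: int = 0      # users who agreed with this assessment
    disagree: int = 0   # users who disagreed with it


def feedback_weight(agree, disagree):
    """Smoothed share of users who agreed; 0.5 when there is no feedback yet."""
    return (agree + 1) / (agree + disagree + 2)


def aggregate(assessments, trust_policy, default_trust=0.5):
    """Weighted average of credibility values.

    Each weight is the policy trust in the source (0 to 1) multiplied by the
    user-feedback weight. Returns None if no assessment carries any weight.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for a in assessments:
        trust = trust_policy.get(a.source, default_trust)
        weight = trust * feedback_weight(a.agree, a.disagree)
        weighted_sum += weight * a.credibility
        total_weight += weight
    if total_weight == 0:
        return None
    return weighted_sum / total_weight


# Hypothetical policy rules: trust the fact-checker fully, the algorithm less.
policy = {"example-fact-checker": 1.0, "example-algorithm": 0.6}

assessments = [
    Assessment("example-fact-checker", -0.9, agree=14, disagree=2),
    Assessment("example-algorithm", -0.4),
]
print(aggregate(assessments, policy))
```

With 14 users agreeing and 2 disagreeing, the first assessment keeps most of its weight, so the combined score stays close to the fact-checker's rating; a policy that sets a source's trust to 0 removes it from the result entirely.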

The Co-inform technical solutions are accessible to end users via two tools: (i) a browser plug-in that helps citizens stay vigilant about misinformation online and avoid becoming spreaders (as a proof of concept, this extension is currently focused on content shared via Twitter); and (ii) a dashboard where policymakers and journalists can track misinformation trends around topics of interest through visualisations and graphs.

For those who want more technical detail, see our paper “Linked Credibility Reviews for Explainable Misinformation Detection”, which has been accepted at the International Semantic Web Conference. The paper also presents evaluation results showing that one of our modules achieves state-of-the-art performance on several datasets.

Co-inform’s mission is to foster critical thinking and digital literacy.

Academic surveys have shown that online misinformation is becoming more difficult to identify. Online misinformation has the potential to deceive even readers with strong literacy skills. Our goal is to provide citizens, journalists, and policymakers with tools to spot ‘fake news’ online, understand how it spreads, and obtain access to verified information.

The Co-inform project is co-funded by Horizon 2020 – the Framework Programme for Research and Innovation (2014-2020)

H2020-SC6-CO-CREATION-2016-2017 (CO-CREATION FOR GROWTH AND INCLUSION)

Type of action: RIA (Research and Innovation action)

Proposal number: 770302