Combating misinformation: the importance of human judgement

Authors: Martino Mensio (Research Assistant), Lara Piccolo (Research Fellow), Tracie Farrell (Research Associate) at the Knowledge Media Institute, The Open University

Limiting, or even stopping, the spread of misinformation is a concern that currently challenges social media platforms, media players and citizens in general. Distinguishing fact from fiction, though, is not always a deterministic task: subjective forces such as human values, beliefs and motivations come into play when judging a piece of information and deciding whether or not to promote it.

Problems of manual and automated fact-checking

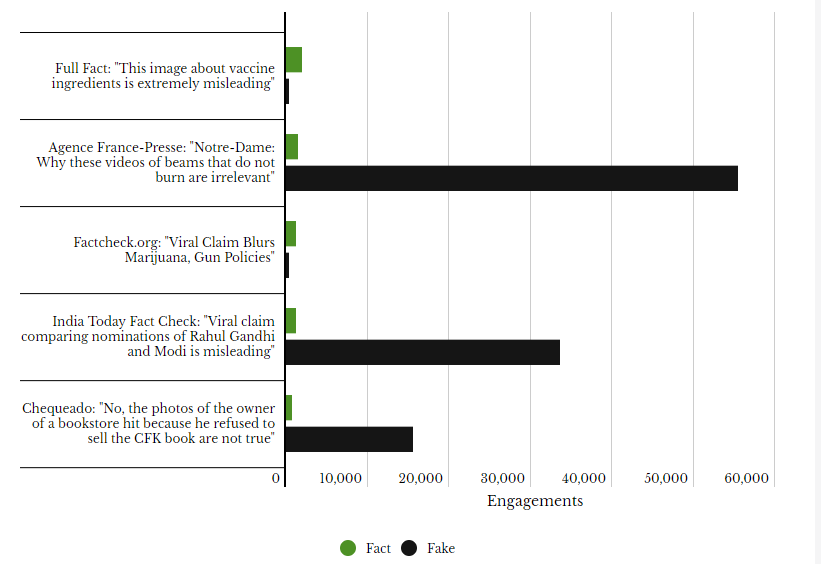

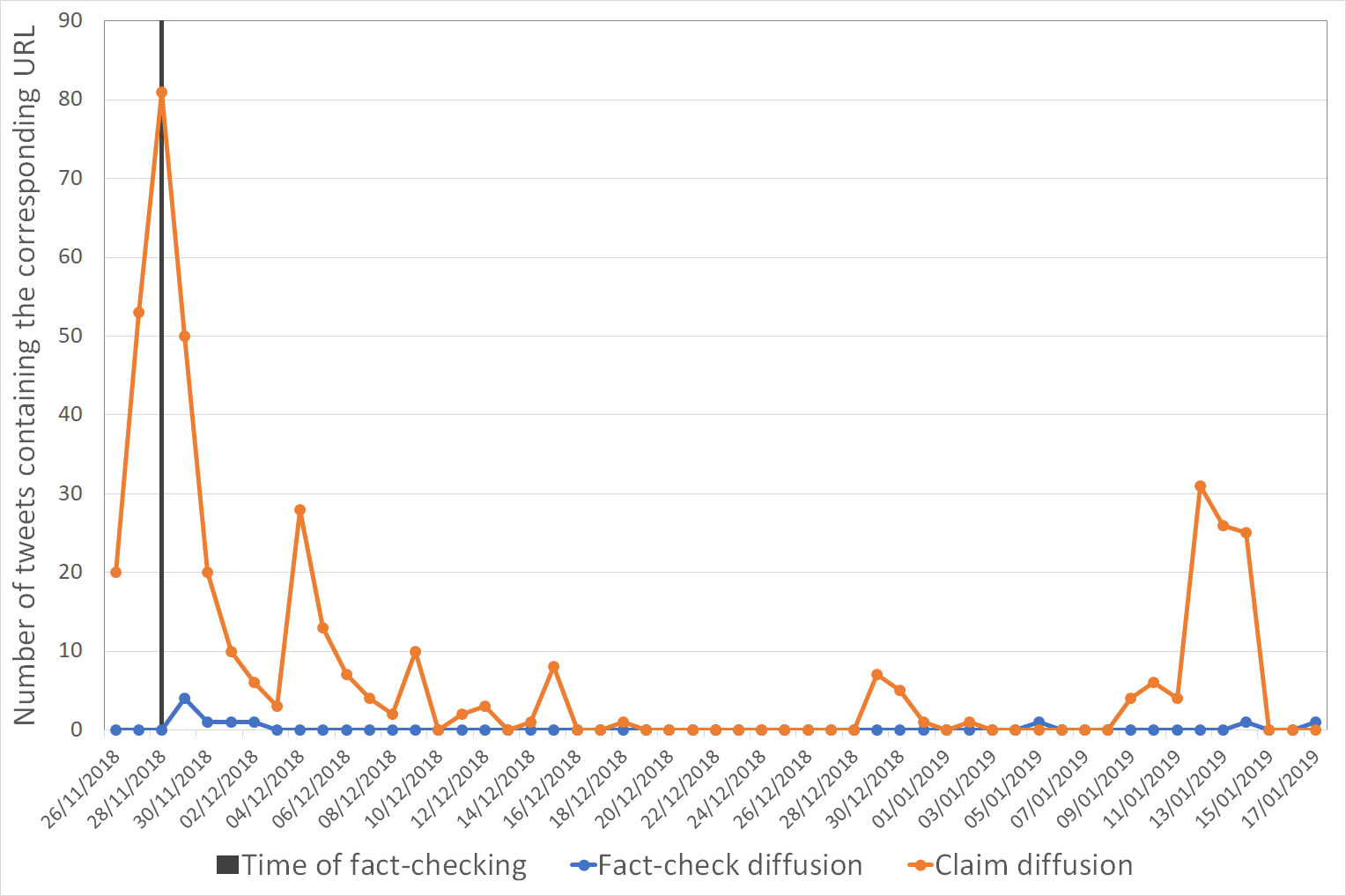

Fact-checkers have played an important role in this battle by critically evaluating claims and making information about their veracity and credibility available to citizens. They are usually journalists equipped with the skills and methodology to detect verifiable claims and look for evidence, committed to a set of principles that maximise neutrality and adherence to the truth. However, the volume and speed of information circulating on social media are beyond the reach of any network of fact-checkers, especially because most of the assessment is still done manually. There is also the problem of reaching citizens, as a comparison between the reach of debunked hoaxes and that of the corresponding fact-checking articles shows.

The recirculation of an already debunked story compared with the diffusion of the corresponding fact-check

Some expect automated, AI-powered tools to be the solution for assessing the “truth” and defeating the various forms of misinformation. Despite the speed of computational approaches and their ability to process huge volumes of data, this expectation is likely to fail if the intelligence involved is purely artificial.

At the current state of the art, AI-based tools still face several limitations: limited accuracy, since their output can be wrong; possible non-neutrality in their judgements, inherited from their design, which leads to bias and prejudice; and, connected to that, a lack of transparency to end users about how decisions are made.

As the European Parliament has pointed out, such limitations may pose a threat to freedom of expression, pluralism and democracy. For this reason, the European Parliament argues in favour of regulating the use of AI for content moderation so that it always involves strong human participation, a practice already adopted by some fact-checking agencies.

Extended Intelligence: combining efforts of fact-checking with the power of AI

Extended Intelligence is an approach that combines AI with the expertise of fact-checkers or the critical thinking of citizens, rather than replacing humans in decision-making processes.

The collaboration between fact-checkers and AI needs to be tight. Experts’ knowledge can be used to train AI models to learn features and extract insights. Openly available collections of fact-checked claims (e.g. Google Fact Check Explorer and DataCommons) can also be used to this end. Based on the learned cues, AI models can predict the credibility of new stories or retrieve related documents to speed up the fact-checkers’ analysis.
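To make the “related documents” idea a little more concrete, here is a minimal sketch that ranks previously fact-checked claims by their textual similarity to a new story, using a simple bag-of-words cosine similarity. It assumes a small, hand-written in-memory list of claims; the claims, verdicts and function names are hypothetical and do not come from Google Fact Check Explorer, DataCommons or any Co-Inform tool. Real systems would likely use richer text representations.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lower-case the text and count its word tokens (a simple bag of words)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def related_fact_checks(story: str, fact_checks: list, top_k: int = 3) -> list:
    """Return up to top_k (score, fact_check) pairs with non-zero similarity, best first."""
    story_vector = tokenize(story)
    scored = [
        (cosine_similarity(story_vector, tokenize(fc["claim"])), fc)
        for fc in fact_checks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pair for pair in scored[:top_k] if pair[0] > 0]


# Hypothetical, hand-written examples of already fact-checked claims.
FACT_CHECKS = [
    {"claim": "Drinking hot water cures the flu", "verdict": "false"},
    {"claim": "The city council approved a new bike lane budget", "verdict": "true"},
    {"claim": "5G towers spread viruses", "verdict": "false"},
]

if __name__ == "__main__":
    new_story = "Viral post claims hot water can cure the flu in a day"
    for score, fc in related_fact_checks(new_story, FACT_CHECKS):
        print(f"{score:.2f}  [{fc['verdict']}]  {fc['claim']}")
```

The retrieved fact-checks are only suggestions: the fact-checker still decides whether an earlier verdict actually applies to the new story.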

Transparent models allow experts to inspect and refine the assumptions and features that led to a result. Experts can then verify the predictions and validate the results before publishing them, since accountability remains with the journalists.
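One way to picture this division of labour, purely as an illustrative sketch: every model prediction is queued for human review together with the cues that produced it, uncertain predictions are flagged for closer investigation, and nothing becomes publishable until an expert records a verdict, which may override the model. The `Prediction` and `Review` types, the `route_prediction` and `sign_off` functions and the 0.8 threshold are all hypothetical assumptions, not part of any Co-Inform tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    story_id: str
    label: str          # model's suggested label, e.g. "credible" or "not credible"
    confidence: float   # model's confidence in that label, between 0 and 1
    features: dict      # cues behind the prediction, shown to the expert for transparency


@dataclass
class Review:
    prediction: Prediction
    priority: str                        # "confirm" or "investigate"
    expert_verdict: Optional[str] = None

    @property
    def publishable(self) -> bool:
        # Nothing is published until a human expert has recorded a verdict.
        return self.expert_verdict is not None


# Hypothetical threshold: below it, the expert investigates the story in depth.
CONFIDENCE_THRESHOLD = 0.8


def route_prediction(pred: Prediction) -> Review:
    """Queue every prediction for human review, flagging low-confidence ones."""
    priority = "confirm" if pred.confidence >= CONFIDENCE_THRESHOLD else "investigate"
    return Review(prediction=pred, priority=priority)


def sign_off(review: Review, verdict: str) -> Review:
    """Record the expert's final verdict, which may differ from the model's label."""
    review.expert_verdict = verdict
    return review


if __name__ == "__main__":
    review = route_prediction(Prediction("story-42", "not credible", 0.65, {"source_age_days": 3}))
    print(review.priority, review.publishable)  # investigate False
    sign_off(review, "credible")
    print(review.publishable)  # True
```

The design choice here is that confidence only changes how much attention a prediction receives, never whether a human sees it.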

In this way, AI and experts work in tandem: AI spares experts repetitive work, and experts prevent wrong decisions by AI tools.

Promoting critical thinking

Deciding whether to trust information found on social media has become an increasing challenge for citizens in general. Major platforms (e.g. Facebook, YouTube) and other tools (e.g. browser extensions, searchable collections) are trying to better connect citizens with fact-checked information.

Providing accurate information is an essential measure. However, research has shown that being aware of the facts is not enough to stop misinformation from spreading. In a context of media pluralism, technical solutions have to take human subjectivity into account and invite people to think critically, estimate risks and judge the impact of information on society.

Instead of censoring or banning content, tools should stimulate users’ curiosity, inviting them to act as active thinkers who investigate details and explanations and, consequently, learn to distinguish good-quality content.

Open challenges

With Extended Intelligence, AI acts as a bridge that conveys and amplifies expert opinion in terms of both coverage and reach. Extended coverage means being able to predict, with a given level of confidence, the credibility of news items that experts have not yet assessed. Extended reach, instead, means making expert judgement more accessible, so that more users come into contact with it.

However, several challenges remain open. One is defining the boundary between opinion and fact: how can opinion and partisan interpretation be distinguished from the misleading manipulation of events, in a context where points of view are, and must be, many and varied?

The answer to this question determines whether we improve society by promoting transparency and dialogue or, on the other hand, restrict freedom of expression through censorship. This problem is not new to manual fact-checking, and with Extended Intelligence we need to make sure that the amplified efforts point in the right direction.

For this reason, the tools to be developed during the Co-Inform project will focus on widening points of view, conveying expert knowledge and stimulating critical thinking.

The Co-Inform project is co-funded by Horizon 2020, the Framework Programme for Research and Innovation (2014-2020).

H2020-SC6-CO-CREATION-2016-2017 (CO-CREATION FOR GROWTH AND INCLUSION)

Type of action: RIA (Research and Innovation action)

Proposal number: 770302